Data is changing the way the world works. It underpins almost everything, whether a study on disease remedies, a company’s revenue strategy, efficient building construction, or the targeted ads on your social network page. Much of this data is machine-readable rather than human-readable, and this is where data modeling enters the picture. Data modeling is the process of assigning relational rules to data. A data model simplifies data and turns it into meaningful information that businesses can use for decision-making and strategy. This article explains how data modeling works, the main types of data modeling, and how it can help your business.

What is a Data Model?

Good data enables organizations to set baselines, benchmarks, and targets in order to keep moving forward. To allow this measuring, data must be organized through data description, data semantics, and data consistency constraints. A data model is an abstract model that allows for the continued development of conceptual models and the establishment of linkages between data objects.

A company may have a massive data bank, but if there is no standard to verify the basic quality and interpretability of the data, it is useless. A solid data model assures actionable downstream outputs, knowledge of best practices for data, and access to the best tools.

Let us now look at the many types and procedures of data modeling.

What is Data Modeling?

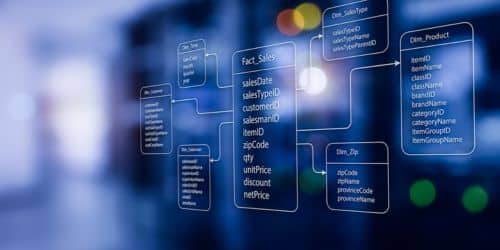

In software engineering, data modeling is the process of simplifying a software system’s diagram or data model using formal techniques. It entails communicating data and information using text and symbols. The data model serves as the template for developing new databases or reengineering legacy applications.

Data modeling is therefore the first and most important stage in establishing the structure of available data. It is the process of developing data models in which data relationships and constraints are documented and then coded for reuse. It expresses data conceptually, using diagrams, symbols, or text to depict how data elements relate to one another.

Thus, data modeling aids in increasing consistency in nomenclature, rules, semantics, and security. As a result, data analytics improves. The emphasis is on the necessity for data availability and organization, regardless of how it is used.

Process of Data Modeling

Data modeling is the process of developing a conceptual representation of data objects and their interrelationships. The process typically consists of several stages: requirements gathering, conceptual design, logical design, physical design, and implementation.

Data modelers collaborate with stakeholders at each stage of the process to understand the data requirements, define the entities and attributes, establish the relationships between the data objects, and create a model that accurately represents the data in a way that application developers, database administrators, and other stakeholders can use.

Why is Data Modeling Important?

By modeling your data, you describe what data you have, how you use it, and what your requirements are for usage, protection, and governance. Your company can use data modeling to:

- Create a framework for collaboration between your IT and business departments.

- Identify opportunities to improve business operations by specifying data requirements and applications.

- Save time and money on IT and process investments by planning ahead.

- Reduce errors (and error-prone redundant data entry) while improving data integrity.

- Improve the speed and performance of data retrieval and analytics by planning for capacity and growth.

- Set and monitor target key performance indicators based on your company’s goals.

So the value lies not only in the finished data model but also in the process of building it. The process itself has numerous advantages.

The Benefits of Data Modeling

Data modeling is an essential process in the creation of any software program or database system. Among the advantages of data modeling are:

- Better understanding of data: Data modeling helps stakeholders understand the structure and relationships of data, which can inform decisions about how to use and store it.

- Improved data quality: Data modeling can assist in identifying flaws and inconsistencies in data, which can enhance overall data quality and prevent problems in the future.

- Better communication and collaboration: Data modeling facilitates communication and collaboration among stakeholders, which can lead to more effective decision-making and better outcomes.

- Increased efficiency: Data modeling can help to streamline the development process by providing developers, database administrators, and other stakeholders with a clear and consistent representation of the data.

Data Modeling’s Limitations

Despite the numerous advantages of data modeling, there are some limitations and obstacles to be aware of. Some of the limitations of data modeling are as follows:

- Inflexible data models: Data models can be rigid, making it difficult to adjust to changing requirements or data formats.

- Complexity: Because data models can be complex and difficult to grasp, stakeholders may struggle to provide input or collaborate effectively.

- Time-consuming: Data modeling can be a time-consuming process, particularly for big or complex datasets.

Data Modeling Types

Organizations utilize three different types of data models. These are created during the planning stages of an analytics project. They span from abstract to discrete requirements, entail inputs from a specified subset of stakeholders, and fulfill various functions.

#1. Conceptual Model

A conceptual model is a graphical depiction of database concepts and their relationships, presenting the high-level user perspective of the data. It concentrates on establishing entities, their attributes, and the relationships between them rather than the intricacies of the database itself.

#2. Logical Model

This model specifies the structure of the data entities and their relationships in greater detail. A logical data model is typically utilized for a specific project since the goal is to create a technical map of rules and data structures.

#3. Physical Model

This is a framework or schema that defines how data is physically stored in a database. It is used for database-specific modeling in which every column has an exact type and properties. The physical model defines the internal schema. The goal is to actually implement the database.

The difference between the logical and physical data models is that the logical model describes the data in detail but takes no part in database implementation, whereas the physical model does. In other words, the logical data model serves as the foundation for the physical model, which provides an abstraction of the database and helps generate the schema.
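The step from a logical model to a physical one can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the entity and attribute names, the `LOGICAL_MODEL` layout, and the `to_ddl` helper are all hypothetical, and SQLite is used simply because it ships with Python.

```python
import sqlite3

# Logical model: entities, attributes, abstract types, and keys.
# All names here are hypothetical and chosen only for illustration.
LOGICAL_MODEL = {
    "customer": {
        "attributes": {"customer_id": "integer", "name": "text", "email": "text"},
        "primary_key": "customer_id",
        "foreign_keys": {},
    },
    "customer_order": {
        "attributes": {
            "order_id": "integer",
            "customer_id": "integer",
            "order_date": "date",
            "total": "decimal",
        },
        "primary_key": "order_id",
        "foreign_keys": {"customer_id": ("customer", "customer_id")},
    },
}

# Physical decision: how each abstract type is stored in SQLite.
SQLITE_TYPES = {"integer": "INTEGER", "text": "TEXT", "date": "TEXT", "decimal": "NUMERIC"}


def to_ddl(entity, spec):
    """Render one logical entity as a SQLite CREATE TABLE statement."""
    columns = []
    for name, logical_type in spec["attributes"].items():
        column = f"{name} {SQLITE_TYPES[logical_type]}"
        column += " PRIMARY KEY" if name == spec["primary_key"] else " NOT NULL"
        columns.append(column)
    for column, (ref_table, ref_column) in spec["foreign_keys"].items():
        columns.append(f"FOREIGN KEY ({column}) REFERENCES {ref_table}({ref_column})")
    return f"CREATE TABLE {entity} (\n  " + ",\n  ".join(columns) + "\n)"


# Implement the physical model in an in-memory database.
conn = sqlite3.connect(":memory:")
for entity, spec in LOGICAL_MODEL.items():
    conn.execute(to_ddl(entity, spec))

tables = [row[0] for row in conn.execute(
    "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
print(tables)  # ['customer', 'customer_order']
```

The logical description stays database-agnostic. Only `SQLITE_TYPES` and `to_ddl` would need to change to target a different database engine.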

Conceptual data models are commonly drawn up for employee management systems, simple order management, hotel reservations, and similar systems. These examples show how the conceptual model is used to communicate and define the database’s business requirements and to present concepts. It is intended to be straightforward rather than technical.
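As a rough sketch, a conceptual model for simple order management can be written down as nothing more than a list of entities and the relationships between them. The entity names, the `Relationship` class, and the `undefined_entities` helper below are assumptions made for illustration, not part of any standard notation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Relationship:
    source: str       # entity on the "one" side
    verb: str         # how the business describes the link
    target: str       # entity on the "many" side
    cardinality: str


# Hypothetical entities for a simple order management system.
ENTITIES = ["Customer", "Order", "Order Line", "Product"]

RELATIONSHIPS = [
    Relationship("Customer", "places", "Order", "one-to-many"),
    Relationship("Order", "contains", "Order Line", "one-to-many"),
    Relationship("Product", "appears on", "Order Line", "one-to-many"),
]


def undefined_entities(entities, relationships):
    """Return entity names used in relationships but never declared."""
    used = {r.source for r in relationships} | {r.target for r in relationships}
    return sorted(used - set(entities))


for r in RELATIONSHIPS:
    print(f"{r.source} {r.verb} {r.target} ({r.cardinality})")

print(undefined_entities(ENTITIES, RELATIONSHIPS))  # []
```

Nothing here mentions column types or storage, which is the point: the conceptual level only records what the business talks about and how those things relate.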

Let us now look at the techniques.

Data Modeling Techniques

There are three fundamental data modeling techniques. The first is the Entity-Relationship Diagram (ERD), a technique for modeling and designing relational or conventional databases. The second is the Unified Modeling Language (UML) class diagram, part of a standardized family of notations for modeling and designing information systems. The third is data dictionary modeling, which involves a tabular definition or representation of data assets.
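To make the data dictionary idea concrete, the sketch below derives a small tabular definition from an existing table. It assumes SQLite and a hypothetical `employee` table. `PRAGMA table_info` is a real SQLite feature, while the `data_dictionary` helper and its output layout are illustrative choices.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical table used only to have something to describe.
conn.execute("""
    CREATE TABLE employee (
        employee_id INTEGER PRIMARY KEY,
        full_name   TEXT NOT NULL,
        hire_date   TEXT NOT NULL,
        manager_id  INTEGER
    )
""")


def data_dictionary(conn, table):
    """Return one dictionary entry per column of the given table."""
    # PRAGMA table_info yields (cid, name, type, notnull, dflt_value, pk).
    rows = conn.execute(f"PRAGMA table_info({table})").fetchall()
    return [
        {
            "column": name,
            "type": col_type,
            "required": bool(notnull) or bool(pk),
            "primary_key": bool(pk),
        }
        for _cid, name, col_type, notnull, _default, pk in rows
    ]


entries = data_dictionary(conn, "employee")
for entry in entries:
    print(entry)
```

A fuller data dictionary would usually also carry a business description, an owner, and allowed values for each column. Those have to be supplied by people, since the database cannot infer them.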

Best Data Modeling Practices in 2023

When starting a data modeling project or assignment, keep the following recommended practices in mind:

#1. Create a data model for visualization.

It’s unlikely that staring at endless columns and rows of alphanumeric entries will result in enlightenment. Most people are far more comfortable with graphical data visualizations that highlight anomalies, or with straightforward drag-and-drop interfaces for quickly inspecting and joining data tables.

Data visualization techniques like these help you clean your data so that it is complete, error-free, and free of redundancy. They also help you recognize differently typed data records that refer to the same real-world item, so that they can be converted into standardized fields and formats and multiple data sources can be combined.

#2. Recognize the needs of the company and strive for meaningful results.

The purpose of data modeling is to help an organization perform more successfully. For a data modeling practitioner, the biggest challenge is capturing business requirements accurately. This is required to identify which data should be collected, stored, updated, and made available to users.

You can get a full understanding of the requirements by asking users and stakeholders about the results they need from the data. Then begin designing your data sets with those objectives in mind.

#3. Create a single source of truth.

Bring all raw data from your sources into your database or data warehouse. If you rely only on “ad-hoc” data extraction from the source, the flow of your data model may be hampered. If you employ the whole pool of raw data kept in your centralized hub, you will have access to all past data.

Applying logic to data pulled directly from a source and running calculations on it can seriously damage, if not ruin, your entire model. It is also very hard to repair or maintain if something goes wrong along the way.
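One way to picture this separation is a raw table that is loaded untouched, with the business logic kept in a view on top of it. The sketch below assumes SQLite, and the table, view, and column names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Raw layer: rows are stored exactly as the (hypothetical) source delivered them.
conn.execute("CREATE TABLE raw_orders (order_id INTEGER, amount_cents INTEGER, status TEXT)")
conn.executemany(
    "INSERT INTO raw_orders VALUES (?, ?, ?)",
    [(1, 1250, "paid"), (2, 800, "cancelled"), (3, 4300, "paid")],
)

# Modeled layer: the logic lives in a view, so the raw rows stay intact and
# the logic can be corrected later without extracting the data again.
conn.execute("""
    CREATE VIEW paid_orders AS
    SELECT order_id, amount_cents / 100.0 AS amount
    FROM raw_orders
    WHERE status = 'paid'
""")

paid_total = conn.execute("SELECT SUM(amount) FROM paid_orders").fetchone()[0]
raw_count = conn.execute("SELECT COUNT(*) FROM raw_orders").fetchone()[0]
print(paid_total, raw_count)  # 55.5 3
```

If the definition of a paid order changes, only the view needs to be rewritten. The cancelled order is still available in `raw_orders` for any later analysis.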

#4. Begin with rudimentary data modeling and work your way up.

Data can become extremely complicated very quickly due to factors such as quantity, nature, structure, growth rate, and query language. When data models are kept small and simple at first, it is easier to correct problems and take the right next steps.

After you are certain that your original models are correct and meaningful, you can add fresh datasets, eliminating any discrepancies along the way. Look for a tool that is simple to use at first but can later support very large data models. It should also allow you to quickly combine data from several physical locations.

#5. Before proceeding, double-check each stage of your data modeling process.

Check each step before proceeding to the next, beginning with the data modeling priorities derived from business needs. Choosing a primary key for a dataset, for example, ensures that every record can be uniquely identified by the value of its primary key.

The same approach can be applied when joining two datasets, to determine whether they have a one-to-one or one-to-many relationship and to avoid many-to-many relationships that result in overly complex or unmanageable data models.
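Both checks are easy to automate. The following is a minimal sketch that assumes each dataset is a non-empty list of dictionaries. The function names and sample records are hypothetical.

```python
from collections import Counter


def is_unique_key(rows, key):
    """True if every row has a distinct, non-missing value for `key`."""
    values = [row.get(key) for row in rows]
    return None not in values and len(set(values)) == len(values)


def cardinality(left_rows, right_rows, key):
    """Classify the relationship between two non-empty datasets joined on `key`."""
    left_max = max(Counter(row[key] for row in left_rows).values())
    right_max = max(Counter(row[key] for row in right_rows).values())
    if left_max == 1 and right_max == 1:
        return "one-to-one"
    if left_max == 1 or right_max == 1:
        return "one-to-many"
    return "many-to-many"


customers = [{"customer_id": 1, "name": "Ada"}, {"customer_id": 2, "name": "Grace"}]
orders = [
    {"order_id": 10, "customer_id": 1},
    {"order_id": 11, "customer_id": 1},
    {"order_id": 12, "customer_id": 2},
]

print(is_unique_key(customers, "customer_id"))        # True
print(is_unique_key(orders, "customer_id"))           # False
print(cardinality(customers, orders, "customer_id"))  # one-to-many
```

A "many-to-many" result is a signal to stop and rethink the join, for example by introducing a linking table, before building anything further on top of it.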

#6. Sort business inquiries by dimensions, facts, filters, and order.

Understanding how these four elements can be used to express business queries helps you organize data sets so that business questions are easier to formulate. For example, a retail company with locations all over the world may want to identify its best-performing stores over the preceding year.

The facts would be the historical sales data, the dimensions would be the product and the store location, the filter would be “last 12 months,” and the order would be “top five stores in descending order of sales.” By carefully organizing your data sets and using separate tables for dimensions and facts, you can support this analysis, identify the top sales performers for each quarter, and accurately answer additional business intelligence questions.
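The retail example maps almost directly onto a query over separate fact and dimension tables. The sketch below assumes SQLite. The table names, sample rows, and the fixed reference date are all made up for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Dimensions: descriptive context for each sale.
    CREATE TABLE dim_store   (store_id INTEGER PRIMARY KEY, city TEXT NOT NULL);
    CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    -- Facts: one row per sale, pointing at the dimensions.
    CREATE TABLE fact_sales (
        store_id   INTEGER NOT NULL REFERENCES dim_store(store_id),
        product_id INTEGER NOT NULL REFERENCES dim_product(product_id),
        sale_date  TEXT NOT NULL,
        amount     INTEGER NOT NULL
    );
    INSERT INTO dim_store VALUES (1, 'Lagos'), (2, 'Lima'), (3, 'Oslo');
    INSERT INTO dim_product VALUES (1, 'Kettle'), (2, 'Toaster');
    INSERT INTO fact_sales VALUES
        (1, 1, '2023-03-01', 500),
        (1, 2, '2023-07-10', 300),
        (2, 1, '2023-05-05', 900),
        (3, 2, '2022-01-15', 5000),  -- older than 12 months, filtered out
        (3, 1, '2023-09-09', 100);
""")

TOP_STORES = """
    SELECT s.city, SUM(f.amount) AS total_sales      -- fact, grouped by a dimension
    FROM fact_sales AS f
    JOIN dim_store AS s ON s.store_id = f.store_id
    WHERE f.sale_date >= date(?, '-12 months')       -- filter: last 12 months
    GROUP BY s.city
    ORDER BY total_sales DESC                        -- order: best first
    LIMIT 5
"""

top = conn.execute(TOP_STORES, ("2023-12-31",)).fetchall()
print(top)  # [('Lima', 900), ('Lagos', 800), ('Oslo', 100)]
```

Because the facts and dimensions are kept apart, answering the same question per product or per quarter only means changing the `GROUP BY` and the join, not restructuring the data.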

#7. Make calculations ahead of time to avoid disagreements with end users.

It is critical to have a single version of the truth on which users can base their work. Even if people disagree on how it should be used, there should be no disagreement about the underlying information or the math used to arrive at the answer. For example, a calculation may be required to convert daily sales data into monthly values that can then be compared to determine the best and worst months.

Instead of requiring everyone to use their own calculators or spreadsheet tools, a company can avoid difficulties by incorporating this computation into its data modeling in advance.
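The daily-to-monthly conversion mentioned above could be defined once, in one place, roughly as follows. The sample figures and the `monthly_totals` name are hypothetical.

```python
from collections import defaultdict
from datetime import date

# Hypothetical daily sales records: (day, amount).
daily_sales = [
    (date(2023, 1, 5), 120),
    (date(2023, 1, 20), 80),
    (date(2023, 2, 3), 50),
    (date(2023, 3, 14), 200),
    (date(2023, 3, 30), 75),
]


def monthly_totals(rows):
    """Sum daily amounts into one total per (year, month)."""
    totals = defaultdict(int)
    for day, amount in rows:
        totals[(day.year, day.month)] += amount
    return dict(totals)


monthly = monthly_totals(daily_sales)
best_month = max(monthly, key=monthly.get)
worst_month = min(monthly, key=monthly.get)

print(monthly)      # {(2023, 1): 200, (2023, 2): 50, (2023, 3): 275}
print(best_month)   # (2023, 3)
print(worst_month)  # (2023, 2)
```

When every report reads from the same precomputed monthly figures, two users cannot arrive at different totals just because they grouped the days differently.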

#8. Look for causation rather than just correlation.

Data modeling includes guidance on how to use the modeled data. Allowing users to access business analytics on their own is a significant step, but it is just as crucial that they don’t jump to false conclusions.

Consider, for example, two unrelated products whose sales appear to rise and fall together. Are one item’s sales driving sales of the other, or do both fluctuate in response to external factors such as the economy and the weather? Confusing correlation with causation here may direct attention to the wrong place and waste resources.
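A toy calculation shows how easily this happens. In the sketch below, both product series are generated from the same made-up temperature readings, so neither product influences the other, yet their correlation comes out at essentially 1. All numbers are invented for illustration.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# External factor (hypothetical weekly average temperatures).
temperature = [12, 18, 25, 31, 22, 15]

# Both products depend only on temperature, not on each other.
ice_cream_sales = [20 + 3 * t for t in temperature]
sunscreen_sales = [5 + 2 * t for t in temperature]

r = pearson(ice_cream_sales, sunscreen_sales)
print(round(r, 6))  # 1.0
```

A dashboard showing only the two sales series would suggest a strong link. Modeling the external factor as its own dimension makes the real driver visible.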

#9. Use modern tools and strategies to complete challenging tasks.

Programming may be needed to prepare data sets for analysis before more extensive data modeling can begin. If a tool or app can handle such complex tasks instead, people no longer need to learn multiple coding languages, which frees up time for tasks that benefit your company.

Specialized software, such as Extract, Transform, and Load (ETL) tools, may facilitate or automate all data extraction, transformation, and loading processes. A drag-and-drop interface can also be utilized to combine many data sources, and data modeling can even be automated.

#10. Improved data modeling for better business outcomes

Data modeling that supports users in quickly obtaining answers to their business concerns may improve the company’s performance in areas such as effectiveness, yield, competency, and customer happiness, among others.

Technology can speed up the work of exploring data sets for answers to business questions and of aligning the model with corporate objectives, business goals, and tools. It also entails assigning data priorities to specific business functions. Once these conditions are met, your firm will be able to more reliably forecast the value and productivity benefits that data modeling will provide.

#11. Validate and test your data analytics application.

Test your analytics system in the same way you would any other implemented functionality. Check that the volume and accuracy of the collected data are correct. Consider whether your data is well organized and lets you compute your key metrics. You can also write some queries to get a better understanding of how the system will work in practice. Furthermore, we recommend building several test projects to verify your implementation.
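A simple reconciliation check covers the volume and accuracy questions. The sketch below compares a hypothetical source extract against what was loaded into an SQLite table. The `sales` table and the `reconcile` helper are illustrative names.

```python
import sqlite3

# Hypothetical source extract: (sale_id, amount_cents).
source_rows = [(1, 1250), (2, 800), (3, 4300)]

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (sale_id INTEGER PRIMARY KEY, amount_cents INTEGER NOT NULL)")
conn.executemany("INSERT INTO sales VALUES (?, ?)", source_rows)


def reconcile(conn, source_rows):
    """Compare row count and total amount between the source and the loaded table."""
    loaded_count, loaded_total = conn.execute(
        "SELECT COUNT(*), COALESCE(SUM(amount_cents), 0) FROM sales"
    ).fetchone()
    return {
        "row_count_matches": loaded_count == len(source_rows),
        "total_matches": loaded_total == sum(amount for _id, amount in source_rows),
    }


checks = reconcile(conn, source_rows)
print(checks)  # {'row_count_matches': True, 'total_matches': True}
```

Checks like these are cheap enough to run after every load, which catches dropped or duplicated rows before they reach a report.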

How Does Data Modeling Affect Analytics?

Data modeling and data analytics are inextricably linked because a quality data model is required to obtain the most impactful analytics for business intelligence that guides decision-making. The process of developing data models forces each business unit to consider how it contributes to overall corporate goals. Furthermore, a good data model ensures efficient analytics performance regardless of how large and complex your data estate is or becomes.

When all of your data is properly defined, evaluating only the data you require becomes considerably easier. Because you’ve previously established the linkages between data attributes, it’s straightforward to evaluate and see the effects of changing processes, prices, or staffing.

Selecting a Data Modeling Tool

The good news is that a great business intelligence tool will include all of the data modeling tools you require, with the exception of the exact software products and services you select to develop your physical model. So you can pick the one that best meets your company’s needs and existing infrastructure. When considering a data analytics tool for its data modeling and analytics capabilities, ask yourself these questions.

#1. Is your data modeling tool easy to use?

The technical people implementing the model may be able to handle whatever tool you throw at them, but your business strategists and everyday analytics users—and your entire organization—will not get the most out of the tool if it is difficult to use. Look for an easy-to-use user interface that will assist your team with data storytelling and data dashboards.

#2. How effective is your data modeling tool?

Another critical characteristic is performance—speed and efficiency, which translate into the capacity to keep the business running smoothly while your users run analysis. The best-planned data model isn’t the best if it can’t withstand the rigors of real-world situations, which should include corporate development and increasing volumes of data, retrieval, and analysis.

#3. Is your data modeling tool in need of upkeep?

If every change to your business model necessitates time-consuming modifications to your data model, your company will not benefit from the model or the related analytics. Look for a solution that makes maintenance and upgrades simple, so your company may pivot as needed while still having access to the most recent data.

#4. Will your data be protected?

Government regulations require you to secure your customers’ data, but your company’s viability demands that you protect all of your data as a valuable asset. You should ensure that the tools you choose include strong security features, such as controls that grant access to those who need it and deny it to those who don’t.

What is the Most Important Factor to Consider While Modeling Data?

The primary goal of data modeling is to lay the groundwork for a database that can rapidly load, retrieve, and analyze massive amounts of data. An effective data model requires mapping corporate data, the relationships within it, and how the data is used.

How Frequently Should a Data Model be Retrained?

The frequency with which a data model should be retrained varies depending on the model and the problem it helps to solve. Based on how frequently training data sets change, whether model performance has dropped, and other factors, a model may need to be retrained daily, weekly, or less frequently, such as monthly or annually.

What Is Data Model Validation?

The process of data model validation ensures that the model is appropriately constructed and can serve its intended function. A good data modeling tool makes the validation process easier by sending automated notifications that prompt users to repair problems, improve queries, and make other adjustments.
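One concrete form of validation is checking that the relationships declared in the model actually hold in the data. The sketch below assumes SQLite, whose `PRAGMA foreign_key_check` reports rows that violate a declared foreign key. The tables and the deliberately orphaned row are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Enforcement is switched off here to mimic a bulk load that skipped checks.
conn.execute("PRAGMA foreign_keys = OFF")
conn.executescript("""
    CREATE TABLE customer (customer_id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE customer_order (
        order_id    INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customer(customer_id)
    );
    INSERT INTO customer VALUES (1, 'Ada');
    INSERT INTO customer_order VALUES (10, 1);
    INSERT INTO customer_order VALUES (11, 99);  -- orphan: there is no customer 99
""")

# Each result row is (child table, rowid, parent table, foreign key index).
violations = conn.execute("PRAGMA foreign_key_check").fetchall()
for table, rowid, parent, _fk_index in violations:
    print(f"{table} row {rowid} references a missing row in {parent}")
```

With this data the check reports order 11 as the only problem. A validation step in a modeling tool does something similar at larger scale and across more kinds of rules.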

What Are the Fundamental Data Modeling Concepts?

There are three types of database modeling concepts: conceptual data modeling, logical data modeling, and physical data modeling. Data modeling concepts, which range from abstract to discrete, establish a blueprint for how data is organized and managed in an organization.

Summary

A well-planned and comprehensive data model is essential for the creation of a really effective, useful, secure, and accurate database.

Good data modeling and database design are critical for developing functional, dependable, and secure application systems and databases that operate well with data warehouses and analytical tools – and ease data sharing with business partners and across numerous application sets. Well-thought-out data models help to assure data integrity, increasing the value and reliability of your company’s data.
